Disruptioneering

Hybrid AI

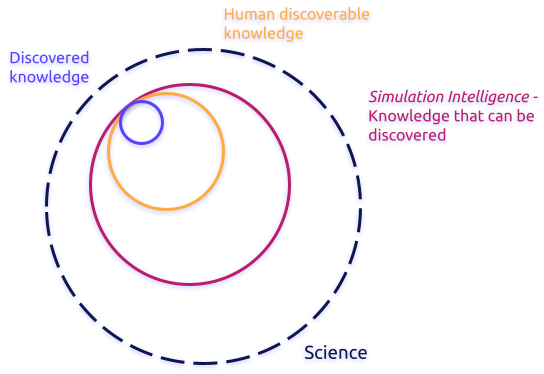

From the simulation intelligence perspective, hybrid AI has several meanings:

- Semi-mechanistic ML, to integrate domain knowledge and data-driven learning (for example, differential equations and neural networks).

- Physics-infused ML, a new class of methods that integrates physics and ML in ways that can improve the performance and understanding of both.

- Neurosymbolic computing, or the principled integration of neural network-based learning with symbolic knowledge representation and logical reasoning.
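The first meaning above, semi-mechanistic ML, can be sketched in a few lines. The example is illustrative only and rests on stated assumptions: the known mechanistic part is a hypothetical exponential decay, the "true" system adds a forcing term the modeller does not know, and a linear least-squares residual stands in for the neural network. None of the names or constants come from the source.

```python
import random

K_KNOWN = 0.5  # assumed known decay rate of the mechanistic part (hypothetical)


def mechanistic_rhs(x):
    """Known physics only: exponential decay, dx/dt = -k * x."""
    return -K_KNOWN * x


def true_rhs(x):
    """Hypothetical ground truth, unknown to the modeller: decay plus extra terms."""
    return -K_KNOWN * x + 2.0 - 0.1 * x


def fit_residual(xs, dxdt_obs):
    """Least-squares fit of r(x) = w0 + w1 * x to (observed - mechanistic) derivatives.

    The linear residual is a stand-in for a neural network.
    """
    rs = [d - mechanistic_rhs(x) for x, d in zip(xs, dxdt_obs)]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_r = sum(rs) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxr = sum((x - mean_x) * (r - mean_r) for x, r in zip(xs, rs))
    w1 = sxr / sxx
    w0 = mean_r - w1 * mean_x
    return w0, w1


def hybrid_rhs(x, w):
    """Semi-mechanistic model: known physics plus the learned correction."""
    return mechanistic_rhs(x) + w[0] + w[1] * x


def euler(rhs, x0, dt, steps):
    """Integrate dx/dt = rhs(x) with the explicit Euler method."""
    x = x0
    for _ in range(steps):
        x += dt * rhs(x)
    return x


# Noisy derivative observations from the (hypothetical) true system.
rng = random.Random(0)
xs = [rng.uniform(0.0, 10.0) for _ in range(200)]
dxdt_obs = [true_rhs(x) + rng.gauss(0.0, 0.05) for x in xs]
weights = fit_residual(xs, dxdt_obs)

# Compare trajectories from the same initial condition.
x_true = euler(true_rhs, 8.0, 0.01, 500)
x_mech = euler(mechanistic_rhs, 8.0, 0.01, 500)
x_hybrid = euler(lambda x: hybrid_rhs(x, weights), 8.0, 0.01, 500)
```

The design choice here is that the data-driven part only has to explain what the mechanistic part misses, so the hybrid trajectory `x_hybrid` tracks `x_true` more closely than the purely mechanistic `x_mech`.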

"Active" science

SI can bring about new scientific workflows that are iteratively guided by intelligent algorithms and agents, promising higher efficiency and more optimal solutions than traditional approaches.

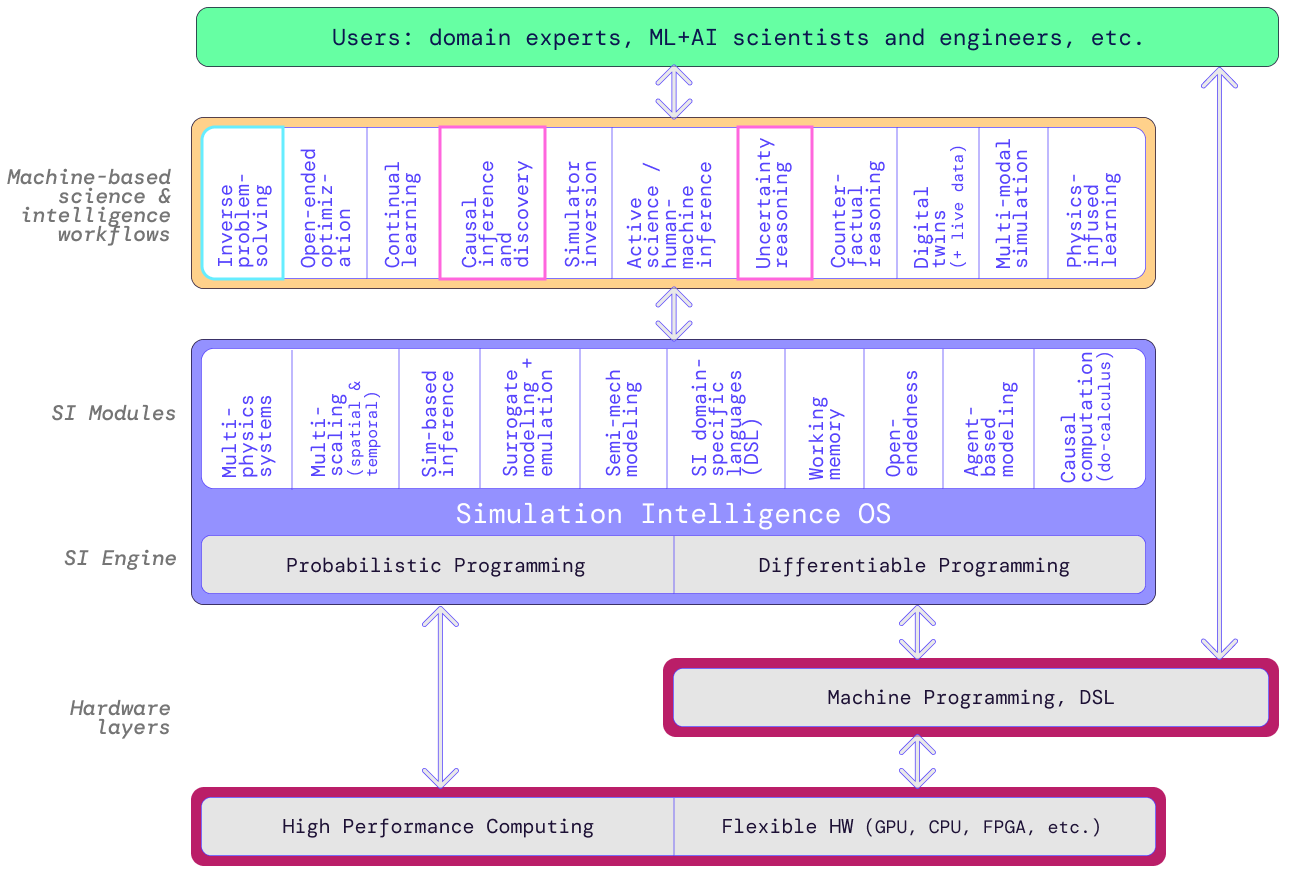

As detailed in Lavin et al. '21, the methods we're building into an integrated computing platform give rise to AI-guided, data-driven science and engineering:

- Human-machine inference

- Active causal discovery

- Online experiment design and optimization

- Inverse problem solving

- Open-ended search and lifelong learning

Use-inspired R&D

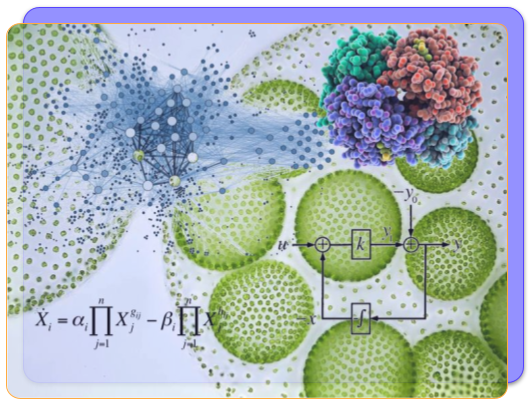

Simulation testbeds

We build in-silico playgrounds for human experts and AI agents to design and experiment with state-of-the-art efficiency and fidelity, simulating counterfactuals and understanding downstream effects before deployment.

To build such systems, we innovate in several key areas:

- mechanisms for high- and low-level causal reasoning,

- multi-physics and multi-scale modeling,

- integration of simulators and online data streams,

- continuous learning algorithms,

- integration of learning theory and physical theory (towards bespoke computational primitives and domain-specific languages (DSLs)), and more.

Across physical and life sciences, our simulation testbeds are necessary for advancing many promising technologies from lab concepts—across the "valley of death"—to scalable, real-world solutions.

Digital Twin Earth

We define digital twins in the scientific sense: simulating the real physics and data-generating processes of an environment or system with sufficient fidelity that one can run queries and experiments in-silico to reliably understand and intervene on the real world.

With a consortium of partners at NASA Frontier Development Lab (nasa.ai), we are building highly efficient AI-driven simulators of Earth systems at all scales — from hyperlocal flood simulations to macro effects of solar weather, from wildfires in Australia to ice sheets in the Arctic, and so on.

Software 3.0

Probabilistic & Dynamic Computing

We build technologies for probabilistic reasoning, uncertainty-aware computation and AI, and reliable cyberphysical systems. Playing a key role is probabilistic programming, an AI paradigm that equates probabilistic generative models with executable programs, thereby enabling rich and complex models to be flexibly built with programming constructs.
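As a minimal sketch of the idea that a probabilistic generative model is an executable program, the example below writes a model as an ordinary Python function and performs inference by running it many times (likelihood weighting, a simple form of importance sampling). The model, the data, and all function names are hypothetical and chosen only for illustration; practical probabilistic programming systems use far more capable inference engines.

```python
import math
import random


def gaussian_logpdf(x, mean, std):
    """Log density of a normal distribution at x."""
    return -0.5 * ((x - mean) / std) ** 2 - math.log(std * math.sqrt(2 * math.pi))


def model(rng, observations):
    """Generative program: sample a latent mean, then score the observations.

    Returns the sampled latent and the log-likelihood of the data under it.
    """
    mu = rng.gauss(0.0, 5.0)  # prior: mu ~ Normal(0, 5)
    log_w = sum(gaussian_logpdf(y, mu, 1.0) for y in observations)  # y ~ Normal(mu, 1)
    return mu, log_w


def posterior_mean(observations, num_samples=20000, seed=0):
    """Estimate E[mu | observations] by likelihood weighting."""
    rng = random.Random(seed)
    runs = [model(rng, observations) for _ in range(num_samples)]
    max_lw = max(lw for _, lw in runs)  # subtract the max for numerical stability
    weights = [math.exp(lw - max_lw) for _, lw in runs]
    total = sum(weights)
    return sum(mu * w for (mu, _), w in zip(runs, weights)) / total


data = [2.1, 1.9, 2.4, 2.0, 1.6]  # hypothetical measurements
estimate = posterior_mean(data)
```

Because this toy model is conjugate, the estimate can be checked against the closed-form posterior mean, `sum(data) / (len(data) + 1 / 25)`. The point of the programmatic form is that the same inference routine keeps working when `model` gains branches, loops, or calls into a simulator.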

Equally important is dynamic computing: when inputs and environments change over time, the correctness and efficiency of software vary with respect to its input.

These, along with probabilistic numerics and continual learning, can help inform the design of new computing paradigms and new definitions of reliability in this increasingly uncertain, data-driven world.

Machine Programming

SI pushes us to rethink the full hardware-software stack, presenting valuable opportunities to build primitives for learning, simulating, and reasoning; for instance, we're building forward-backward differentiability up and down the stack. Machine programming (MP) is a new class of technologies that can understand code of myriad types across platforms, auto-compose it into working systems, and then optimize these systems to execute on the underlying (heterogeneous) hardware; in short, MP aims to automate and optimize software development.
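To make "forward-backward differentiability" concrete, here is a small sketch that assumes the phrase refers to forward-mode and reverse-mode automatic differentiation. The classes `Dual` and `Var` and the test function `f` are hypothetical illustrations, not part of any system described here. The same function is differentiated both ways and the two results agree.

```python
import math


class Dual:
    """Forward mode: carry a value together with its derivative."""

    def __init__(self, val, dot=0.0):
        self.val, self.dot = val, dot

    def __add__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        return Dual(self.val + other.val, self.dot + other.dot)

    __radd__ = __add__

    def __mul__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        return Dual(self.val * other.val, self.dot * other.val + self.val * other.dot)

    __rmul__ = __mul__


def dsin(x):
    return Dual(math.sin(x.val), math.cos(x.val) * x.dot)


class Var:
    """Reverse mode: record parents and local derivatives, then backpropagate."""

    def __init__(self, val, parents=()):
        self.val, self.parents, self.grad = val, parents, 0.0

    def __add__(self, other):
        other = other if isinstance(other, Var) else Var(other)
        return Var(self.val + other.val, ((self, 1.0), (other, 1.0)))

    __radd__ = __add__

    def __mul__(self, other):
        other = other if isinstance(other, Var) else Var(other)
        return Var(self.val * other.val, ((self, other.val), (other, self.val)))

    __rmul__ = __mul__


def vsin(x):
    return Var(math.sin(x.val), ((x, math.cos(x.val)),))


def backward(out):
    """Accumulate d(out)/d(node) into .grad for every node reachable from out."""
    order, seen = [], set()

    def visit(v):
        if id(v) in seen:
            return
        seen.add(id(v))
        for parent, _ in v.parents:
            visit(parent)
        order.append(v)  # post-order: parents come before children

    visit(out)
    out.grad = 1.0
    for v in reversed(order):  # children first, so v.grad is final when used
        for parent, local in v.parents:
            parent.grad += local * v.grad


def f(x, y, sin):
    """Test function f(x, y) = x * y + sin(x), written once for both modes."""
    return x * y + sin(x)


# Forward mode: seed dx = 1 to get df/dx in a single pass.
forward_dx = f(Dual(1.5, 1.0), Dual(2.0, 0.0), dsin).dot

# Reverse mode: one backward pass yields df/dx and df/dy together.
xv, yv = Var(1.5), Var(2.0)
backward(f(xv, yv, vsin))
reverse_dx, reverse_dy = xv.grad, yv.grad
```

Forward mode costs one pass per input direction, while reverse mode costs one backward pass per output, which is why having both available lets a system pick the cheaper one for a given computation.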